Unified Robotics IV: Navigation

October 2018 – December 2018

This is a team project with Michael Abadjiev and Nicholas Johnson.

This project focused on implementing SLAM (simultaneous localization and mapping) using ROS. The provided robot was a TurtleBot3 Burger, a two-wheel-drive robot with a LIDAR mounted on top. The goal was to have the robot explore and map a maze with SLAM, then return home once everything had been explored.

- Used ROS to create a program that performs SLAM in a small maze with a TurtleBot3 Burger.

- Utilized ROS’s networking capability to build a distributed computing setup, giving high throughput for map data along with fast response for motion control.

- Path planning is updated in real time along with the map updates to better avoid obstacles.

- Built a multi-threaded, waypoint-based motion planner and controller for fast, smooth navigation that stays responsive to path updates.

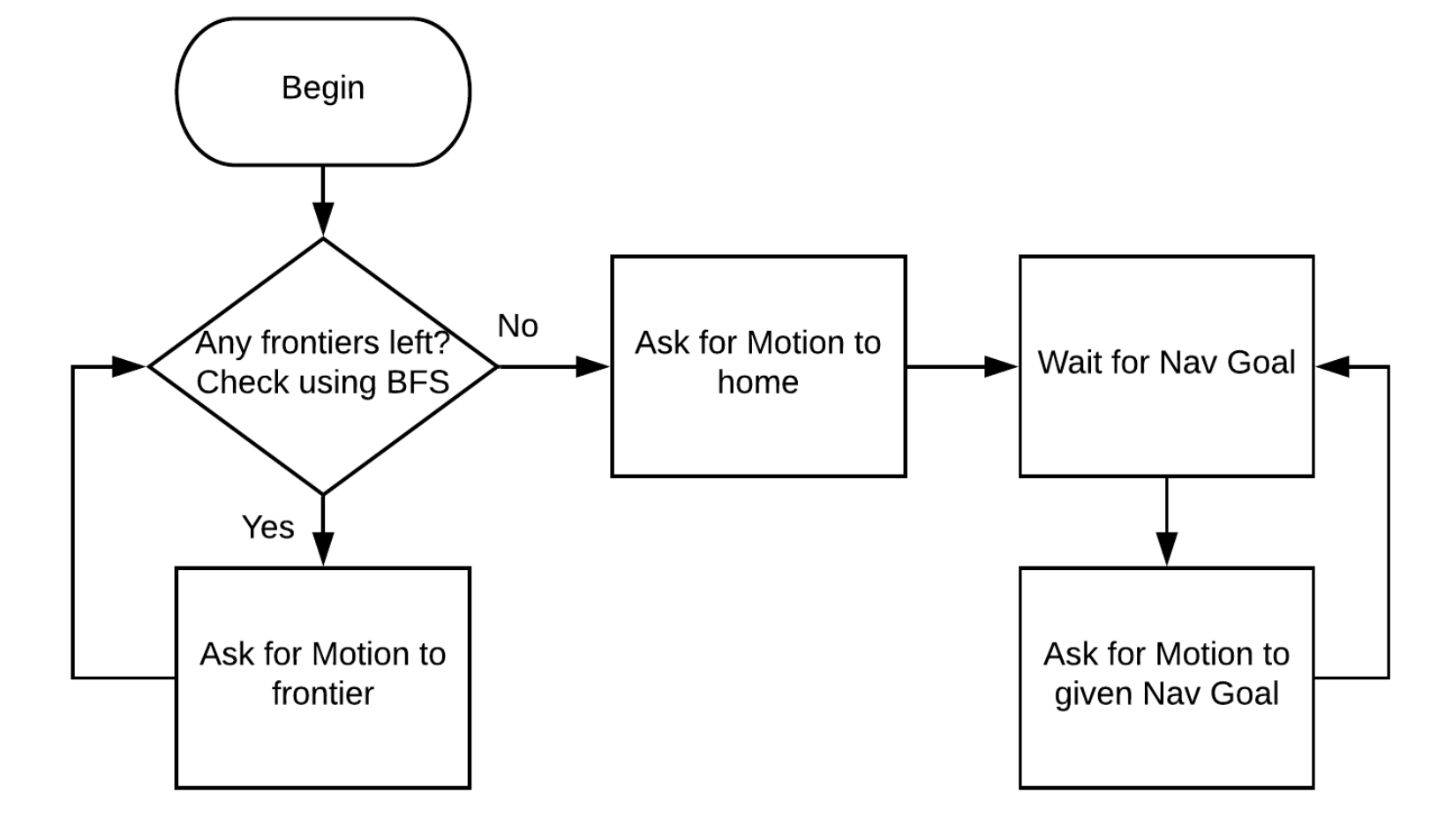

Master flow chart

The overall robot operation is quite simple. A breadth-first search (BFS) finds the next location to explore, then A* generates a path for the robot to reach that location. Finally, the robot drives along the path to explore more of the area. The whole process repeats until the entire map has been explored.
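The exploration step can be sketched as a breadth-first (wavefront) search over an occupancy grid that stops at the first frontier, i.e. a free cell that borders unknown space. This is a minimal sketch, not the project's code: it assumes the `nav_msgs/OccupancyGrid` value convention (-1 unknown, 0 free, 100 occupied), treats only an exact 0 as free, uses 4-connectivity, and the names `is_frontier` and `nearest_frontier` are hypothetical.

```python
from collections import deque

# Assumed cell values, following the nav_msgs/OccupancyGrid convention.
UNKNOWN, FREE, OCCUPIED = -1, 0, 100
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity


def is_frontier(grid, r, c):
    """A frontier cell is a free cell with at least one unknown neighbour."""
    if grid[r][c] != FREE:
        return False
    rows, cols = len(grid), len(grid[0])
    for dr, dc in NEIGHBOURS:
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
            return True
    return False


def nearest_frontier(grid, start):
    """Wavefront (BFS) expansion through free space from the robot's cell.

    Returns the closest reachable frontier cell, or None when no frontier
    is left, i.e. the reachable area has been fully explored.
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    visited = {start}
    while queue:
        r, c = queue.popleft()
        if is_frontier(grid, r, c):
            return (r, c)
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in visited and grid[nr][nc] == FREE):
                visited.add((nr, nc))
                queue.append((nr, nc))
    return None
```

Because BFS expands cells in order of step distance, the first frontier it pops is the nearest one reachable through free space. A `None` result is the natural trigger for the "return home" phase.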

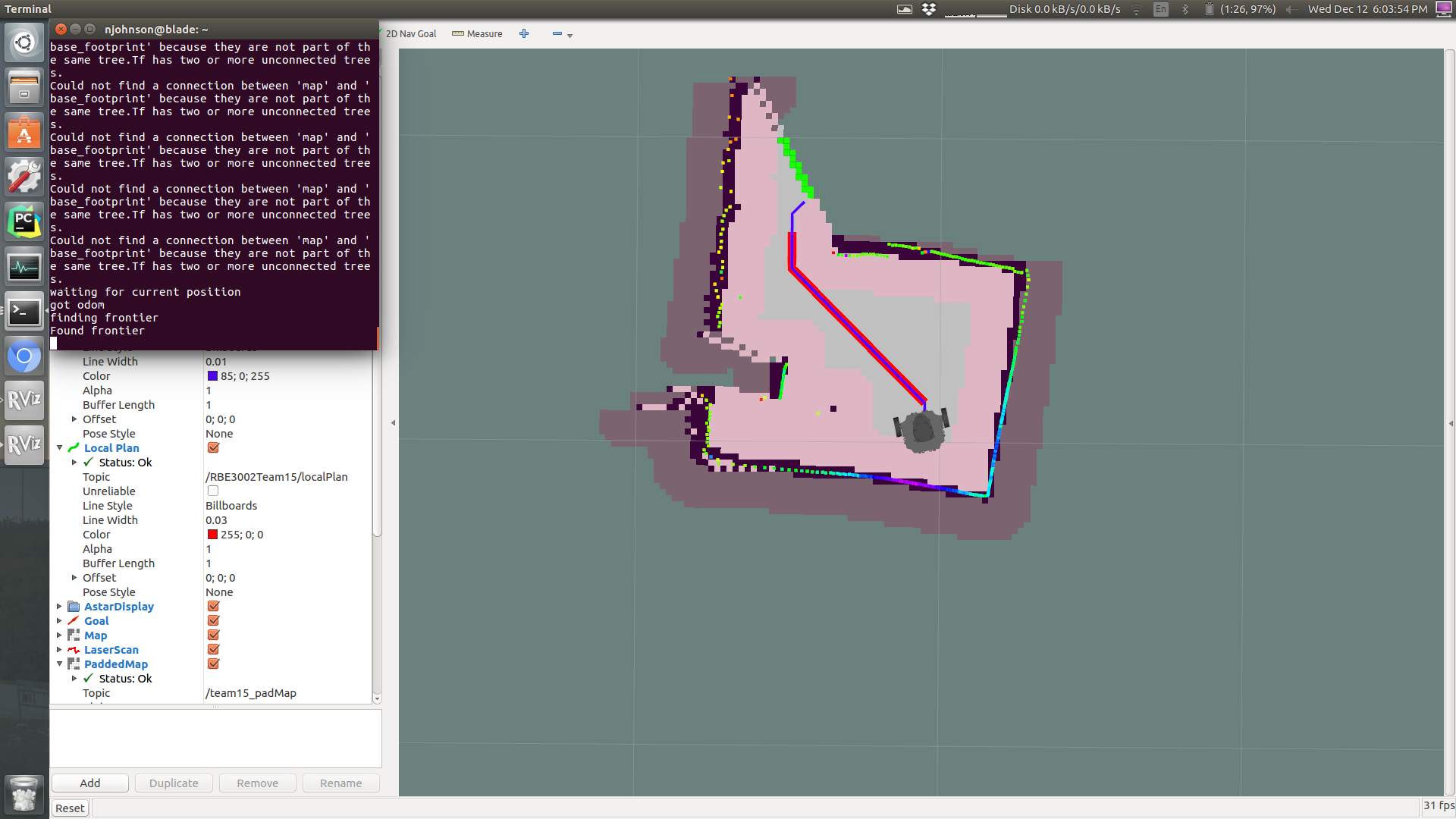

Wavefront search (green) and the robot navigating toward the unknown area

Some external ROS nodes, such as Gmapping, are used. In addition, we used RViz as a graphical interface to display the robot status and mapping data. To speed up development, we also simulated everything in Gazebo. Simulating in Gazebo brings two major advantages: experimenting with the robot becomes much easier, and we can easily try different maze setups.

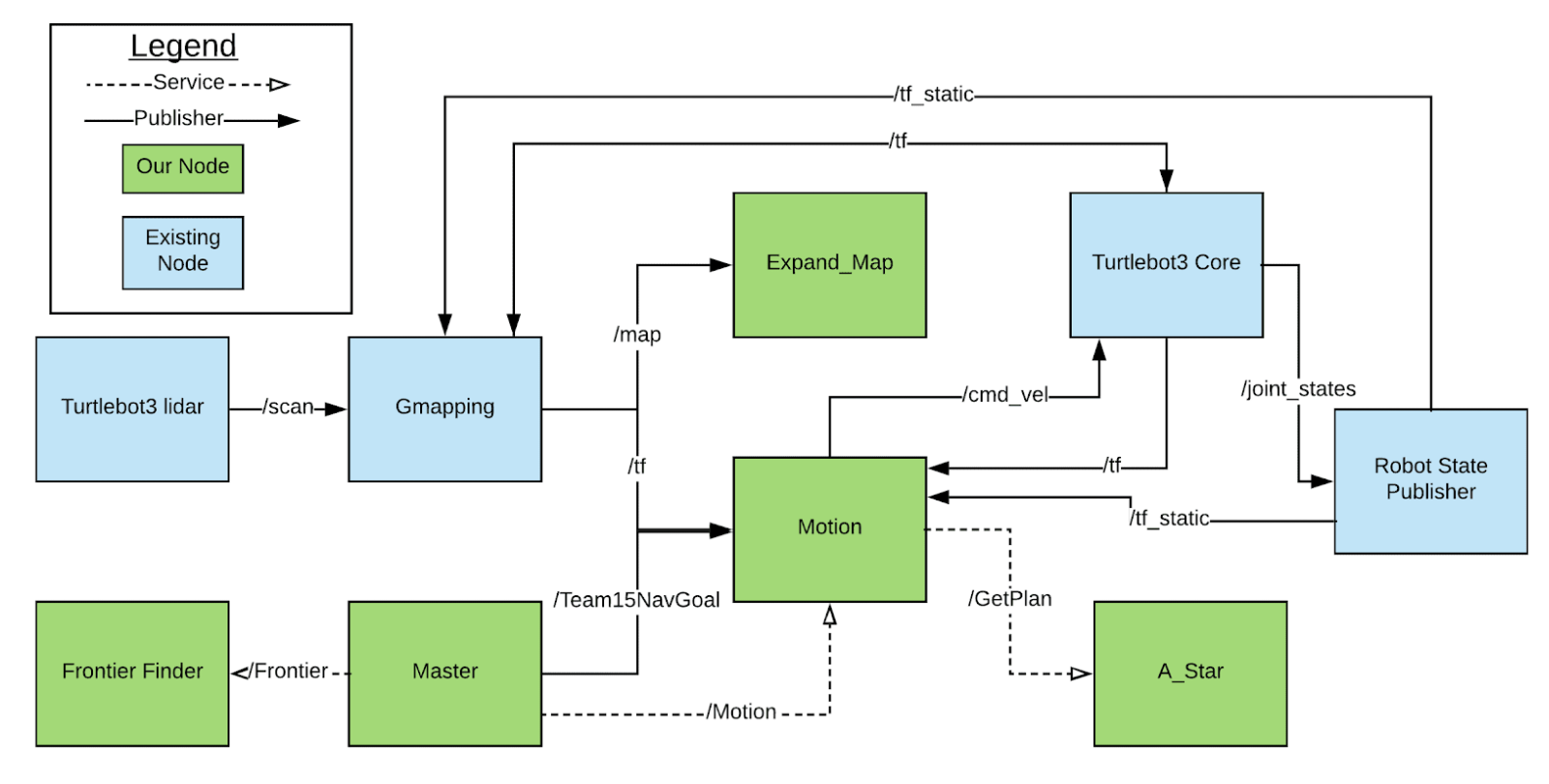

Connections between various nodes.

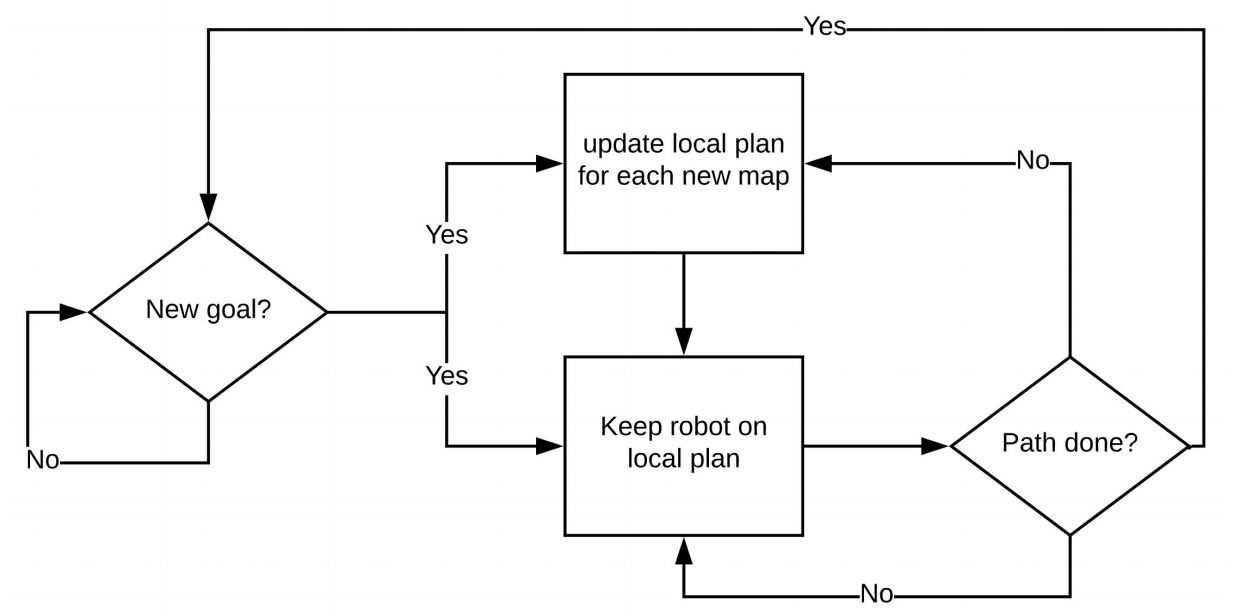

As the robot moves forward, the map is updated with new sensor data along with the robot’s own position. The robot’s localization jumps around a little as it corrects itself, so if the robot stuck to the path planned at the beginning, it would likely hit a wall. Because A* runs quickly on a map of this size, the path can be actively recalculated whenever a new map is available.
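Replanning on every map update amounts to rerunning A* from the robot's current cell on the latest grid. Below is a minimal grid A* sketch, assuming 4-connected unit-cost moves, a Manhattan-distance heuristic, and the same hypothetical cell encoding (0 = free). `astar` is an illustrative name, and any extra costs the project may have used (such as keeping distance from walls) are not modeled here.

```python
import heapq

FREE = 0  # assumed: only cells with value 0 are traversable


def astar(grid, start, goal):
    """Return a list of cells from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance is admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    g_cost = {start: 0}
    came_from = {}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost.get(cell, float("inf")):
            continue  # stale heap entry superseded by a cheaper one
        r, c = cell
        for nb in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nb
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == FREE:
                new_g = g + 1
                if new_g < g_cost.get(nb, float("inf")):
                    g_cost[nb] = new_g
                    came_from[nb] = cell
                    heapq.heappush(open_heap, (new_g + h(nb), new_g, nb))
    return None
```

Calling `astar(latest_grid, robot_cell, goal)` again after each map update yields the refreshed path. If the update reveals that the goal is no longer reachable, the function returns `None`, and the explorer could fall back to picking another frontier.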

To cope with the dynamic path, the motion control for this robot is split into three layers. The top layer is the A* algorithm, which provides a global plan. At the lowest level, a simple motion controller sets the robot’s linear and angular velocities in proportion to the error between its current position and the destination. In between, a local planner takes the list of waypoints from the global plan and feeds them successively to the motion controller. The local planner feeds the next waypoint slightly before the robot reaches the current one, so the robot maintains smooth motion even at high speed.
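The hand-off between the local planner and the proportional controller can be sketched with a simulated unicycle model standing in for the real robot. Everything here is an assumption for illustration: the gains, the 0.15 m switch radius, the 0.05 m goal tolerance, the velocity limits, and the `cos(alpha)` factor that slows the robot when it faces away from the waypoint (an addition to the purely proportional law described above). `p_control` and `follow_waypoints` are hypothetical names.

```python
import math


def p_control(pose, target, k_lin=1.0, k_ang=2.0, v_max=0.22, w_max=2.84):
    """Proportional controller: velocities scale with distance/heading error."""
    x, y, theta = pose
    dx, dy = target[0] - x, target[1] - y
    dist = math.hypot(dx, dy)
    # Heading error wrapped into [-pi, pi].
    err = math.atan2(dy, dx) - theta
    alpha = math.atan2(math.sin(err), math.cos(err))
    # Assumed tweak: slow down (down to zero) when facing away from the target.
    v = min(k_lin * dist, v_max) * max(0.0, math.cos(alpha))
    w = max(-w_max, min(w_max, k_ang * alpha))
    return v, w, dist


def follow_waypoints(pose, waypoints, switch_radius=0.15, goal_tol=0.05,
                     dt=0.05, max_steps=2000):
    """Local planner: feed waypoints one by one, switching early."""
    idx = 0
    x, y, theta = pose
    for _ in range(max_steps):
        is_last = idx == len(waypoints) - 1
        v, w, dist = p_control((x, y, theta), waypoints[idx])
        if is_last and dist < goal_tol:
            return (x, y, theta), True
        if not is_last and dist < switch_radius:
            idx += 1  # hand over the next waypoint before reaching this one
            continue
        # Euler-integrate the unicycle model (stand-in for the real robot).
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
    return (x, y, theta), False
```

For example, `follow_waypoints((0.0, 0.0, 0.0), [(1.0, 0.0), (1.0, 1.0), (2.0, 1.0)])` drives an L-shaped route. The switch radius produces the early hand-off: the controller is never asked to come to rest at an intermediate waypoint and starts steering toward the next one while still approaching the current one. Only the last waypoint uses the tighter goal tolerance.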

Flow chart for the local planning and motion control.


The three layers are separated into three nodes. They run asynchronously because each node requires a different update rate: motion control needs a fast response, while path planning is only necessary when the map is updated. As a result, considerable effort went into their inter-communication and signaling to ensure they work in harmony.
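One common way to connect loops that run at different rates is a single-slot "latest value" mailbox: the slow producer overwrites it, and the fast consumer checks a flag and always takes the newest entry instead of working through a queue of stale paths. In ROS, a subscriber with a queue size of 1 behaves similarly. The sketch below imitates the idea with Python threads; the class name, the rates, and the dummy paths are all hypothetical and not taken from the project.

```python
import threading
import time


class LatestPath:
    """Single-slot mailbox: the planner overwrites it, the controller reads
    the newest path. Older paths are dropped, never queued."""

    def __init__(self):
        self._lock = threading.Lock()
        self._path = None
        self._version = 0
        self.updated = threading.Event()  # set whenever a new path arrives

    def publish(self, path):
        with self._lock:
            self._path = list(path)
            self._version += 1
        self.updated.set()

    def latest(self):
        with self._lock:
            return self._version, self._path


def planner(mailbox, n_updates, period):
    # Slow loop: stands in for replanning after each map update.
    for i in range(n_updates):
        mailbox.publish([(i, 0), (i, 1)])  # dummy path
        time.sleep(period)


def controller(mailbox, stop, period, seen):
    # Fast loop: never blocks on the planner, always uses the newest path.
    while True:
        stopping = stop.is_set()  # sampled before checking for updates
        if mailbox.updated.is_set():
            mailbox.updated.clear()  # clear *before* reading
            version, path = mailbox.latest()
            seen.append(version)  # a real node would pass `path` downstream
        elif stopping:
            break
        time.sleep(period)


def run(n_updates=5, plan_period=0.05, control_period=0.01):
    mailbox, stop, seen = LatestPath(), threading.Event(), []
    ctrl = threading.Thread(target=controller,
                            args=(mailbox, stop, control_period, seen))
    plan = threading.Thread(target=planner,
                            args=(mailbox, n_updates, plan_period))
    ctrl.start()
    plan.start()
    plan.join()
    stop.set()  # only after every publish has happened
    ctrl.join()
    return seen
```

The event is cleared before the path is read, so a publish that lands between the two is picked up either immediately or on the next tick. Sampling `stop` first means the controller drains any pending update before it exits.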

Taking advantage of the fact that ROS nodes communicate over the network and can support a robot with multiple processors connected by Ethernet, we adopted a distributed computing approach. Since the mapping and path planning nodes use quite a bit of computing power, they run on separate laptops. Motion control, on the other hand, requires low latency, so that node is deployed directly on the robot itself.