Autonomous Plant Watering with Mobile Manipulator

JAN 2024 - AUG 2024, Northwestern MSR

The main repository for this project is plant-watering-agent.

This project uses the hello-robot Stretch3 to perform fully autonomous home plant watering. It integrates Nav2 and rtabmap for navigation and YOLO for object detection, with ROS2 tying these major components together.

By combining a well-trained YOLO model with a depth camera, the robot autonomously discovers plants and pours water into each pot correctly, without any scene or tag setup ahead of time.

A custom wavefront frontier exploration algorithm lets the robot “self-deploy”: it discovers plants on its own, without a user having to drive it around first.

Full Demo

The video layout:

- On the right: a third-person view of the robot from a handheld camera.

- In the middle: the output of the YOLO model, which is also what the D435 head camera sees.

- On the left: a 3D view of the environment overlaid with the information the robot has gathered, including 2D navigation maps, 3D obstacles, recognized objects (green/blue markers), and the planned path.

System State Flow Chart

```mermaid
graph TB
    idle["Idle State"]
    frontier["Frontier Search State"]
    explore["Scene Explore State"]
    pickpot["Plant Selection State"]
    navpot["Plant Approach State"]
    idle --launch--> frontier
    frontier --found reachable\nunexplored frontier--> explore
    explore --roughly reached--> frontier
    frontier --All Explored--> idle
    idle --triggering event--> pickpot
    pickpot --Select new pot \n to water--> navpot
    watersearch["Watering Area\nIdentify State"]
    water["Water Pouring State"]
    navpot --reached proximity of pot--> watersearch
    watersearch --found qualified\n watering area--> water
    water --finished watering--> pickpot
    pickpot --all plants watered --> idle
    navpot --Failed to \nnavigate to pot--> pickpot
```

This flow chart describes the top-level states the robot goes through. Everything is autonomous except the triggering event that starts watering all plants. Since the robot has no botanical knowledge, this event is designed to come from an external trigger, such as a systemd timer or user input.
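The top-level flow can be expressed as a transition table. This is a minimal illustration, not the project's code: the state names come from the chart, while the event strings (`"launch"`, `"nav_failed"`, and so on) and the `step()` helper are hypothetical.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    FRONTIER_SEARCH = auto()
    SCENE_EXPLORE = auto()
    PLANT_SELECTION = auto()
    PLANT_APPROACH = auto()
    WATERING_AREA_IDENTIFY = auto()
    WATER_POURING = auto()


# (current state, event) -> next state. Event names are hypothetical labels
# for the edges in the flow chart.
TRANSITIONS = {
    (State.IDLE, "launch"): State.FRONTIER_SEARCH,
    (State.FRONTIER_SEARCH, "found_reachable_frontier"): State.SCENE_EXPLORE,
    (State.SCENE_EXPLORE, "roughly_reached"): State.FRONTIER_SEARCH,
    (State.FRONTIER_SEARCH, "all_explored"): State.IDLE,
    (State.IDLE, "trigger"): State.PLANT_SELECTION,
    (State.PLANT_SELECTION, "selected_pot"): State.PLANT_APPROACH,
    (State.PLANT_SELECTION, "all_watered"): State.IDLE,
    (State.PLANT_APPROACH, "reached_pot"): State.WATERING_AREA_IDENTIFY,
    (State.PLANT_APPROACH, "nav_failed"): State.PLANT_SELECTION,
    (State.WATERING_AREA_IDENTIFY, "found_watering_area"): State.WATER_POURING,
    (State.WATER_POURING, "finished_watering"): State.PLANT_SELECTION,
}


def step(state: State, event: str) -> State:
    """Return the next state; events that don't apply leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


if __name__ == "__main__":
    s = State.IDLE
    for event in ["launch", "found_reachable_frontier", "roughly_reached",
                  "all_explored", "trigger", "selected_pot", "nav_failed"]:
        s = step(s, event)
        print(event, "->", s.name)
```

Ignoring unmatched events keeps a stray callback (for example a late navigation result) from pushing the robot into an unrelated state; the external trigger only has an effect while the robot is idle.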

Stretch3

The mobile manipulator chosen is the hello-robot Stretch3, for its adequate sensor modules, ROS2 integration, large reach zone, and compact footprint.

A few minor modifications were made to the Stretch3 ROS2 driver to better fit this project; a fork contains the necessary changes.

Because the robot’s two main joints (the lift and the telescoping arm) are Cartesian, IK has a closed-form solution. Since MoveIt’s StretchKinematicPlugin is not compatible with Stretch3, manipulation is done by manually solving for the joint values in a visual-servoing style.
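Because each Cartesian axis maps to a single joint, the IK reduces to a few subtractions. The sketch below assumes a target point already expressed in the robot's base frame, with the base driving along +x, the telescoping arm extending along -y, and the lift moving along +z; the offsets and helper names (`ArmGeometry`, `solve_ik`, `servo_to`) are hypothetical, and real values would come from the Stretch3 URDF and calibration. The visual-servo part is shown as a plain proportional update that re-measures the error every cycle.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ArmGeometry:
    # Hypothetical offsets in meters; real values come from the URDF/calibration.
    arm_y_offset: float = 0.15   # |y| of the gripper tip with the arm fully retracted
    lift_z_offset: float = 0.0   # gripper-tip height when the lift reads 0


@dataclass(frozen=True)
class JointGoal:
    base_x: float         # forward base translation
    lift: float           # vertical prismatic joint
    arm_extension: float  # horizontal telescoping joint


def solve_ik(x: float, y: float, z: float,
             geom: Optional[ArmGeometry] = None) -> JointGoal:
    """Closed-form IK: each Cartesian axis is driven by exactly one joint.

    Assumes the arm extends along -y and the lift moves along +z in the base frame.
    """
    geom = geom or ArmGeometry()
    return JointGoal(
        base_x=x,
        lift=z - geom.lift_z_offset,
        arm_extension=-y - geom.arm_y_offset,
    )


def servo_to(measure_error, apply_delta, gain=0.5, tol=0.01, max_iters=50):
    """Proportional visual servoing on one joint.

    measure_error() returns the camera-observed (target - gripper) error along the
    joint's axis; apply_delta(d) commands a relative joint move. True on convergence.
    """
    for _ in range(max_iters):
        error = measure_error()
        if abs(error) < tol:
            return True
        apply_delta(gain * error)
    return False
```

Since the error is re-observed each cycle, small errors in the assumed offsets are corrected by feedback instead of having to be exact.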

YOLO

This project uses YOLO for object detection and segmentation. Instead of using a closed-form algorithm to find the rim of the flowerpot to pour water into, YOLO is trained to recognize the watering area directly. This works reliably because Stretch3’s camera head is always above its own arm, which ensures a top-down view of the flowerpot.
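One simple way to turn a segmented watering area into a pour target is to take the area centroid of its outline polygon. Ultralytics exposes segmentation outlines as pixel-coordinate polygons via `results[0].masks.xy`; the sketch below takes such polygons as plain lists of `(u, v)` points. Picking the largest polygon and using its centroid are assumptions for illustration, not necessarily what the project does.

```python
def area_and_centroid(points):
    """Shoelace formula: return (area, (cx, cy)) of a simple polygon in pixel units."""
    n = len(points)
    if n < 3:
        raise ValueError("a polygon needs at least 3 points")
    twice_area = cx = cy = 0.0
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        twice_area += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if abs(twice_area) < 1e-9:
        # Degenerate (collinear) outline: fall back to the vertex average.
        return 0.0, (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)
    # Dividing by the signed value makes the result independent of winding order.
    return abs(twice_area) / 2.0, (cx / (3.0 * twice_area), cy / (3.0 * twice_area))


def pour_pixel(polygons):
    """Pick the largest watering-area polygon and return its centroid pixel, or None."""
    candidates = [area_and_centroid(p) for p in polygons if len(p) >= 3]
    if not candidates:
        return None
    _, centroid = max(candidates, key=lambda ac: ac[0])
    return centroid
```

The centroid of a strongly non-convex outline can fall outside the region, but for the roughly elliptical soil surface seen from above it normally lands inside.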

Several YOLO models were trained to detect flowerpots and watering areas. The initial dataset was exported from Open Images V7 using FiftyOne: 10k pre-labeled flowerpot images in total, from which a subset of 500 was selected for manual watering-area labeling.

Various dataset combinations were tried to get the best training result. Of the 10k flowerpot-labeled images, 500 were vetted: 180 have watering-area segmentation labels, while the remaining 320 contain only flowerpots with no watering area visible.

Differently trained YOLO models run on a real-world recording from the Stretch3 robot:

The video above compares 4 models trained with different dataset groups and hyperparameters. From left to right:

- Trained only on the 180 images that contain both a flowerpot and a watering area, with no background images.

- Trained on the 500 images, which also include flowerpot-only images and a few background images without flowerpots, but without augmentation hyperparameters (such as rotation).

- Trained on the same 500 images, with augmentation.

- Same parameters as the previous one, but the model was first pre-trained on the 10k flowerpot images, then trained again on the 500 images, which include a portion of flowerpot-only images plus a few background images.
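The four variants can be written down as training configurations for the Ultralytics Python API. This is a hedged reconstruction, not the project's script: the dataset YAML names, the `yolov8n-seg.pt` starting weights, the run names, and the augmentation values are all assumptions; only the argument names (`data`, `epochs`, `imgsz`, `name`, `degrees`, `flipud`, `fliplr`) are real `train()` arguments.

```python
# Augmentation overrides for variants 3 and 4. The keys are real Ultralytics
# train() arguments; the values are illustrative assumptions.
AUGMENTATION = {"degrees": 15.0, "flipud": 0.5, "fliplr": 0.5}

BASE_WEIGHTS = "yolov8n-seg.pt"  # assumed starting checkpoint

VARIANTS = [
    # 1) 180 images with both flowerpot and watering area, no background images
    {"name": "v1_180", "data": "watering_180.yaml", "aug": {}},
    # 2) 500 images (incl. flowerpot-only and background), default hyperparameters
    {"name": "v2_500", "data": "watering_500.yaml", "aug": {}},
    # 3) the same 500 images with extra augmentation
    {"name": "v3_500_aug", "data": "watering_500.yaml", "aug": AUGMENTATION},
    # 4) pre-train on the 10k flowerpot images, then fine-tune on the 500 with augmentation
    {"name": "v4_10k_500_aug", "data": "watering_500.yaml", "aug": AUGMENTATION,
     "pretrain_data": "flowerpot_10k.yaml"},
]


def train_variant(variant, epochs=100, imgsz=640):
    """Train one variant and return the fine-tuned ultralytics.YOLO model."""
    # Imported here so the configuration above can be inspected without ultralytics.
    from ultralytics import YOLO

    weights = BASE_WEIGHTS
    if "pretrain_data" in variant:
        pretrain = YOLO(weights)
        pretrain.train(data=variant["pretrain_data"], epochs=epochs, imgsz=imgsz,
                       name=variant["name"] + "_pretrain")
        weights = str(pretrain.trainer.best)  # best checkpoint from the pre-training run
    model = YOLO(weights)
    model.train(data=variant["data"], epochs=epochs, imgsz=imgsz,
                name=variant["name"], **variant["aug"])
    return model
```

Keeping the variants as data makes it easy to rerun the whole comparison with one loop, e.g. `for v in VARIANTS: train_variant(v)`.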

Labeling Tool

A self-hosted CVAT Docker instance was set up with its serverless plugin for semi-automatic labeling. With the help of the Segment Anything model, the watering-area segmentation labels could be produced quickly.

Data Management

Since neither CVAT nor Open Images V7 directly exports a YOLO-compatible segmentation format, a conversion tool is needed. A good, simple tool for this already exists; I forked it and extended it to not only convert COCO format to YOLO but also merge multiple datasets.
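The core of such a conversion is small. The sketch below is not the forked tool's code: it assumes COCO JSON already loaded into a dict, skips RLE (`iscrowd`) masks, and merges datasets by mapping category *names* onto one shared class list, so a class gets the same YOLO index no matter which export it came from. The function names are hypothetical.

```python
from collections import defaultdict
from pathlib import Path


def merged_class_names(*cocos):
    """Shared class list: category names in first-seen order across all datasets."""
    names = []
    for coco in cocos:
        for cat in coco["categories"]:
            if cat["name"] not in names:
                names.append(cat["name"])
    return names


def coco_to_yolo_seg(coco, class_names):
    """Convert one COCO dict to {image_stem: [YOLO segmentation lines]}.

    Each line is "<class> x1 y1 x2 y2 ...", with coordinates normalized to [0, 1].
    """
    index = {name: i for i, name in enumerate(class_names)}
    cat_to_cls = {c["id"]: index[c["name"]] for c in coco["categories"] if c["name"] in index}
    images = {img["id"]: img for img in coco["images"]}
    labels = defaultdict(list)
    for ann in coco["annotations"]:
        seg = ann.get("segmentation")
        # Skip RLE masks (crowd / dict-style segmentation) and classes not in the list.
        if ann.get("iscrowd") or not isinstance(seg, list) or ann["category_id"] not in cat_to_cls:
            continue
        img = images[ann["image_id"]]
        w, h = img["width"], img["height"]
        for poly in seg:  # multi-part annotations are written as separate lines here
            if len(poly) < 6:  # fewer than 3 points is not a polygon
                continue
            coords = [v / (w if i % 2 == 0 else h) for i, v in enumerate(poly)]
            line = " ".join([str(cat_to_cls[ann["category_id"]])] + [f"{c:.6f}" for c in coords])
            labels[Path(img["file_name"]).stem].append(line)
    return dict(labels)
```

Each dict entry becomes one `<stem>.txt` label file; when merging several exports into one folder, image stems must be unique across datasets.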

YOLO in 3D

Since YOLO detects objects in 2D images, it does not provide 3D object locations. Inspired by prior work, a pair of ROS nodes was created to integrate YOLO into the ROS workflow and pair it with the Intel D435 RGB-D camera, whose depth module allows 2D detections to be converted directly into 3D.
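The 2D-to-3D step is a pinhole-camera back-projection. A minimal sketch, assuming a depth image aligned to the color image and stored in millimeters (as the RealSense ROS driver commonly publishes it), with intrinsics taken from the row-major 3x3 `K` array of `sensor_msgs/CameraInfo`; lens distortion is ignored and the helper names are hypothetical. The result is in the camera's optical frame (x right, y down, z forward) and still needs a TF transform into the map frame.

```python
import statistics


def robust_depth(depth_image, u, v, half_window=2, scale=0.001):
    """Median of valid (non-zero) depth readings around pixel (u, v), in meters.

    A zero reading means "no data" on RealSense depth images, so those are ignored.
    Returns None when the whole window is empty.
    """
    rows, cols = len(depth_image), len(depth_image[0])
    values = [
        depth_image[r][c]
        for r in range(max(0, v - half_window), min(rows, v + half_window + 1))
        for c in range(max(0, u - half_window), min(cols, u + half_window + 1))
        if depth_image[r][c] > 0
    ]
    if not values:
        return None
    return statistics.median(values) * scale


def deproject(u, v, depth_m, K):
    """Back-project pixel (u, v) at depth_m meters using CameraInfo.K (row-major 3x3)."""
    fx, cx = K[0], K[2]
    fy, cy = K[4], K[5]
    return ((u - cx) * depth_m / fx, (v - cy) * depth_m / fy, depth_m)
```

Taking a median over a small window, rather than a single pixel, tolerates the depth holes that often appear at object edges. Pixel coordinates such as the center of a detection must be rounded to integers before indexing.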

Rtabmap Nav2

Rtabmap is used for 3D SLAM with the depth camera. It also marks tall obstacles, such as chairs, that a 2D lidar would normally miss.
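A depth camera catches obstacles like chairs because every 3D point inside a height band can be projected into the 2D grid, while a planar 2D lidar only sees the slice at its mounting height. The sketch below illustrates that idea only; it is not rtabmap's implementation (rtabmap exposes comparable settings through its `Grid/` parameters such as `Grid/MaxObstacleHeight`), and the heights and resolution are assumed values.

```python
import math


def project_obstacles(points, resolution=0.05, min_height=0.05, max_height=1.5):
    """Mark every 2D grid cell that contains a 3D point within (min_height, max_height]."""
    cells = set()
    for x, y, z in points:
        if min_height < z <= max_height:
            cells.add((math.floor(x / resolution), math.floor(y / resolution)))
    return cells


def lidar_slice(points, scan_height=0.2, tolerance=0.02, resolution=0.05):
    """What a planar lidar mounted at scan_height would see: only a thin height slice."""
    return project_obstacles(points, resolution,
                             scan_height - tolerance, scan_height + tolerance)


if __name__ == "__main__":
    # Hypothetical chair seat 0.45 m above the floor (legs omitted for brevity).
    seat = [(1.0 + 0.02 * i, 0.02 * j, 0.45) for i in range(20) for j in range(20)]
    print(len(project_obstacles(seat)), "cells from depth,", len(lidar_slice(seat)), "from lidar")
```

Even if a lidar hits the thin chair legs, the space under the seat looks free to it, which is exactly where a robot base can get stuck.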

The custom wavefront frontier exploration is very similar to the classic algorithm, except that it also performs a collision check. This extra check drastically reduces the number of frontiers the motion planner has to validate. Especially in cluttered home and office setups, it saves a lot of time that would otherwise be spent trying to explore tiny corners.

Visualization of the custom collision-aware wavefront frontier exploration

In the video, white dots are the wavefront, red dots are areas being collision-checked, and blue dots are the discovered frontiers.

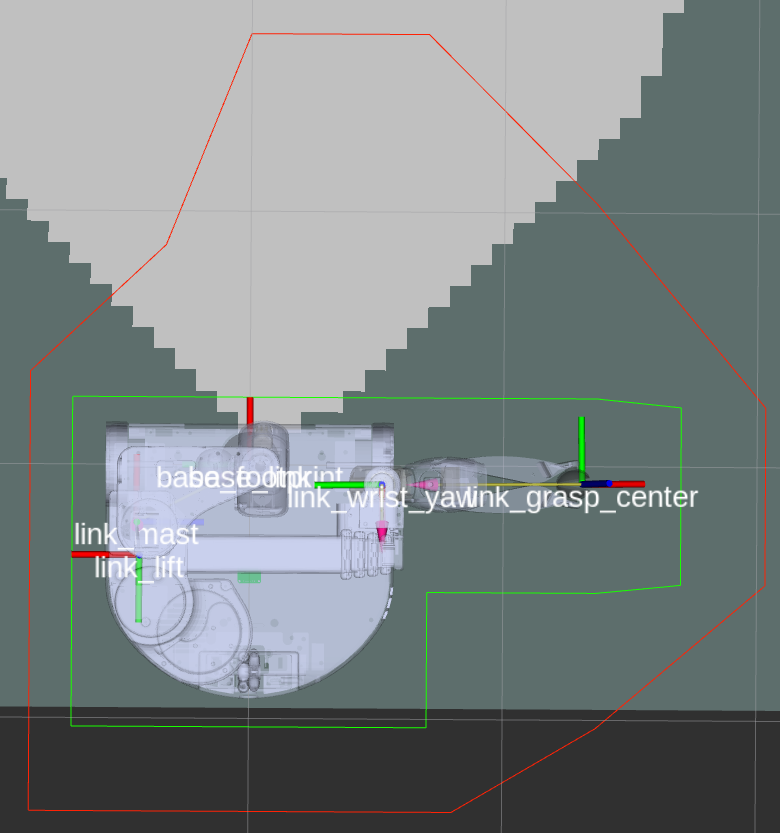
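A minimal sketch of a collision-aware wavefront on a ROS-style occupancy grid (`-1` unknown, 0 to 100 occupancy). The exact collision check used in the project is not described, so this version assumes a circular footprint of `radius` cells and an occupancy threshold of 50; the wave only spreads through cells where that circle is obstacle-free, so frontiers behind gaps the robot cannot fit through are never handed to the planner. All names and thresholds are hypothetical.

```python
from collections import deque

UNKNOWN = -1             # nav_msgs/OccupancyGrid value for unknown cells
OCCUPIED_THRESHOLD = 50  # assumed: occupancy >= 50 counts as an obstacle
NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def is_free(value):
    return 0 <= value < OCCUPIED_THRESHOLD


def footprint_clear(grid, r, c, radius):
    """Collision check: no obstacle cell within a circle of `radius` cells around (r, c)."""
    rows, cols = len(grid), len(grid[0])
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            if dr * dr + dc * dc > radius * radius:
                continue
            rr, cc = r + dr, c + dc
            if 0 <= rr < rows and 0 <= cc < cols and grid[rr][cc] >= OCCUPIED_THRESHOLD:
                return False
    return True


def wavefront_frontiers(grid, start, radius):
    """BFS from `start`, expanding only through free cells that pass the collision check.

    Returns frontier cells (reachable cells next to unknown space) in wavefront
    order, so the nearest reachable frontier comes first.
    """
    rows, cols = len(grid), len(grid[0])
    visited = {start}
    queue = deque([start])
    frontiers = []
    while queue:
        r, c = queue.popleft()
        if any(0 <= r + dr < rows and 0 <= c + dc < cols and grid[r + dr][c + dc] == UNKNOWN
               for dr, dc in NEIGHBORS):
            frontiers.append((r, c))
        for dr, dc in NEIGHBORS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in visited
                    and is_free(grid[nr][nc]) and footprint_clear(grid, nr, nc, radius)):
                visited.add((nr, nc))
                queue.append((nr, nc))
    return frontiers
```

With `radius=0` this degenerates to the classic wavefront frontier search, which makes the effect of the collision check easy to compare.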

Nav2 is used for navigation planning and control. Because of Stretch3’s unique footprint, the Smac State Lattice planner was configured and used, along with a Stretch3-specific set of lattice primitives generated for it. In general, this planner produces better global paths and gets the robot stuck on obstacles less often.

Stretch global footprint (red) and local footprint (green)
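For reference, selecting the State Lattice planner in Nav2 comes down to a few `planner_server` parameters. The sketch writes them as the nested dictionary a Nav2 YAML params file loads into, plus a helper that formats a rectangular costmap `footprint` string with optional padding (for example, a more padded global footprint and a tighter local one). Plugin and parameter names follow the Nav2 Smac planner documentation; the lattice file path, tolerance values, padding, and base dimensions are placeholders, not this project's configuration.

```python
def footprint_string(length, width, padding=0.0):
    """Rectangular footprint centered on the base frame, in Nav2's string format."""
    hl, hw = length / 2 + padding, width / 2 + padding
    corners = [(hl, hw), (hl, -hw), (-hl, -hw), (-hl, hw)]
    return "[" + ", ".join(f"[{x:.3f}, {y:.3f}]" for x, y in corners) + "]"


# Placeholder base dimensions in meters, not measured Stretch3 values.
BASE_LENGTH, BASE_WIDTH = 0.34, 0.33

NAV2_PARAMS = {
    "planner_server": {"ros__parameters": {
        "planner_plugins": ["GridBased"],
        "GridBased": {
            "plugin": "nav2_smac_planner/SmacPlannerLattice",
            # Output of the lattice_primitives generator (placeholder path).
            "lattice_filepath": "/path/to/stretch3_lattice.json",
            "allow_reverse_expansion": False,
            "tolerance": 0.25,
            "max_planning_time": 5.0,
        },
    }},
    "global_costmap": {"global_costmap": {"ros__parameters": {
        "footprint": footprint_string(BASE_LENGTH, BASE_WIDTH, padding=0.05)}}},
    "local_costmap": {"local_costmap": {"ros__parameters": {
        "footprint": footprint_string(BASE_LENGTH, BASE_WIDTH, padding=0.01)}}},
}
```

Because a lattice planner expands precomputed motion primitives instead of grid neighbors, its paths respect how a differential-drive base can actually move, which matters more for a non-circular footprint.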

Spatio Temporal Voxel Layer

The spatio-temporal voxel layer (STVL) is a newer alternative to the raytracing plugin for costmaps, designed specifically for depth cameras. Through some clever use of the sensor’s field of view and temporal decay, it is claimed to reduce computational cost substantially.

I set it up for both the global and local costmaps, and it has been working robustly.
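The field-of-view and decay idea can be illustrated with a toy voxel store: each voxel carries the time it was last observed, voxels inside the camera's current field of view that are no longer observed are cleared without tracing individual rays, and everything else simply expires after a decay time. This is a conceptual sketch under simplifying assumptions (2D horizontal field of view, no occlusion handling, fixed-time expiry); it is not STVL's implementation, and the class and parameter names are hypothetical.

```python
import math


class TemporalVoxelGrid:
    """Toy voxel store with FOV-based clearing and time-based decay (not STVL's code)."""

    def __init__(self, voxel_size=0.05, decay_s=15.0):
        self.voxel_size = voxel_size
        self.decay_s = decay_s
        self.stamps = {}  # (i, j, k) voxel index -> time it was last marked

    def _key(self, point):
        return tuple(math.floor(c / self.voxel_size) for c in point)

    def mark(self, points, now):
        """Insert or refresh the voxel of every observed 3D point."""
        for p in points:
            self.stamps[self._key(p)] = now

    def clear_in_fov(self, origin_xy, yaw, hfov, max_range, observed_points):
        """Remove voxels inside the camera's horizontal FOV that the latest frame no longer sees.

        Simplification: the FOV is a 2D wedge and occlusion is ignored.
        """
        seen = {self._key(p) for p in observed_points}
        ox, oy = origin_xy
        for key in list(self.stamps):
            if key in seen:
                continue
            cx = (key[0] + 0.5) * self.voxel_size
            cy = (key[1] + 0.5) * self.voxel_size
            bearing = math.atan2(cy - oy, cx - ox) - yaw
            bearing = math.atan2(math.sin(bearing), math.cos(bearing))  # wrap to [-pi, pi]
            if math.hypot(cx - ox, cy - oy) <= max_range and abs(bearing) <= hfov / 2:
                del self.stamps[key]

    def decay(self, now):
        """Drop voxels that have not been re-observed within decay_s seconds."""
        self.stamps = {k: t for k, t in self.stamps.items() if now - t < self.decay_s}
```

Obstacles that leave the camera's view are not cleared immediately; they fade out after `decay_s`, which keeps recently seen obstacles around briefly without ray-tracing the whole map on every frame.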

Watering pot

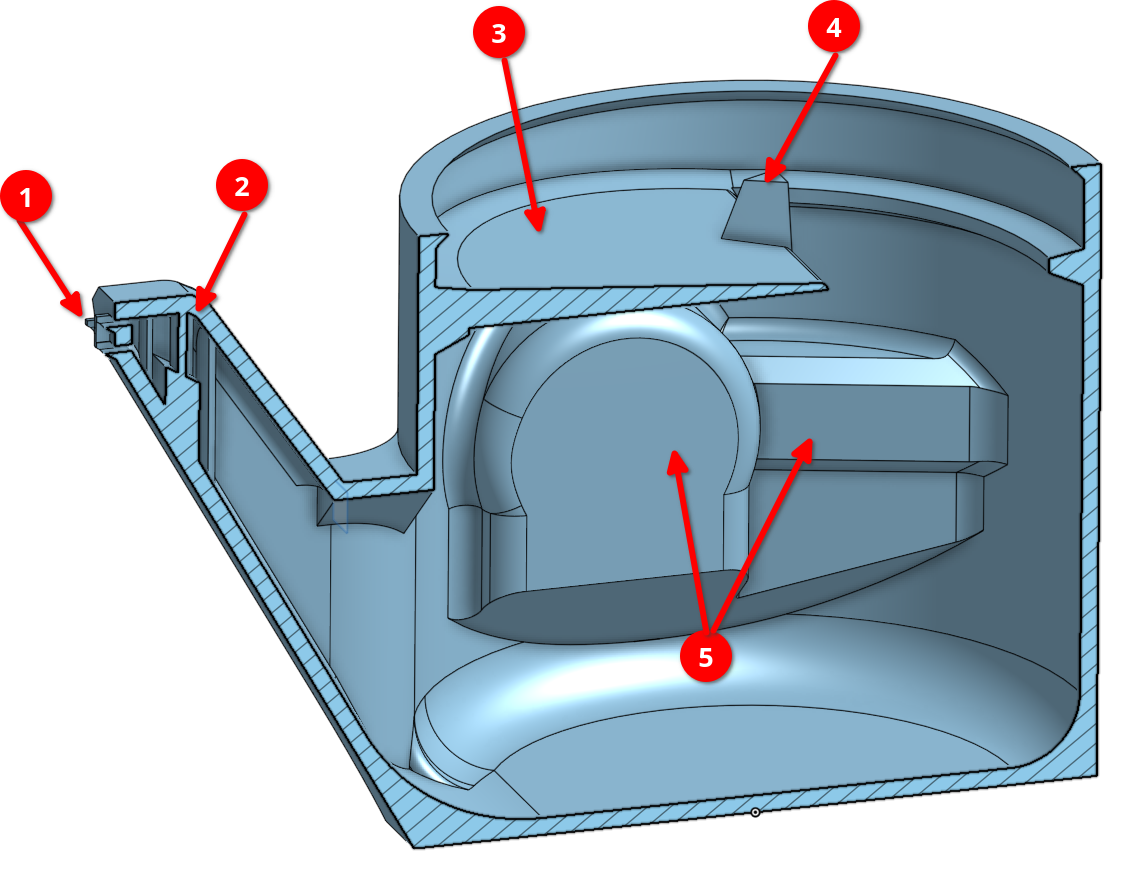

To keep things simple, the Stretch3 gripper holds a watering pot instead of the robot carrying a pump or hoses. A watering pot was therefore designed specifically to fit the Stretch3 gripper. The design was made in Onshape and is publicly available.

Specially designed pot with design features labeled

- A feature that prevents water from clinging to the spout and dripping down onto the robot base.

- An internal criss-cross structure that slows the flow, keeping the flow rate similar at different fill levels of the pot.

- A lid guard plate that keeps water from dumping out while pouring, yet allows filling the pot without opening the lid separately.

- A laminar flow breaker, which prevents the laminar flow created by the smooth guard plate from sealing the opening and causing water to shoot out.

- A special indent that fits Stretch3’s gripper. This shape ensures that even if the gripper is not closed very tightly, the pot won’t rotate or drop.

The pot, including its internal features, is designed to be 3D printed. Since 3D-printed parts are not watertight, a polyurethane coating is applied to make it leak-proof.

Laminar flow is not always good

References

YOLO:

- https://github.com/Alpaca-zip/ultralytics_ros

- https://github.com/z00bean/coco2yolo-seg

- https://docs.ultralytics.com/modes/train/

- https://storage.googleapis.com/openimages/web/index.html

- https://github.com/ultralytics/ultralytics/issues/6340


Nav2:

- https://docs.nav2.org/setup_guides/algorithm/select_algorithm.html#summary

- https://github.com/ros-navigation/navigation2/tree/main/nav2_smac_planner/lattice_primitives

- https://github.com/SteveMacenski/spatio_temporal_voxel_layer?tab=readme-ov-file
